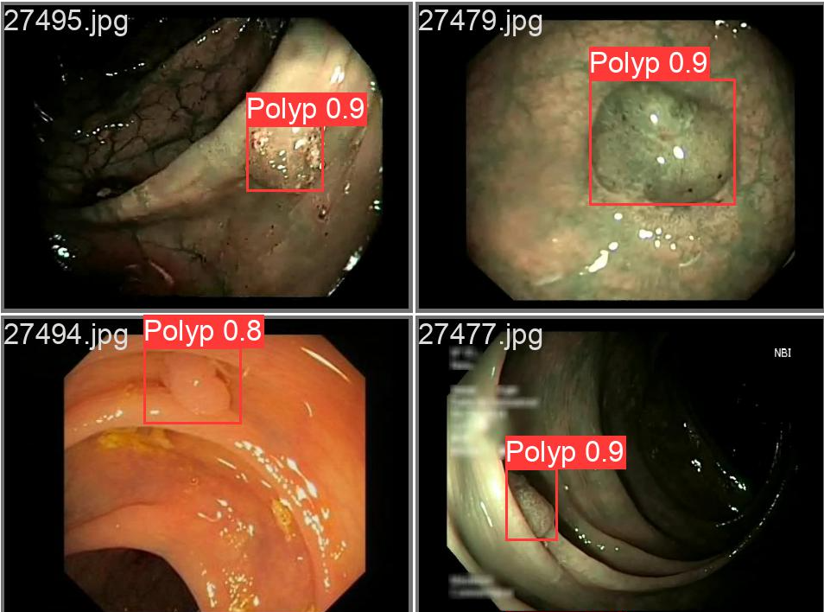

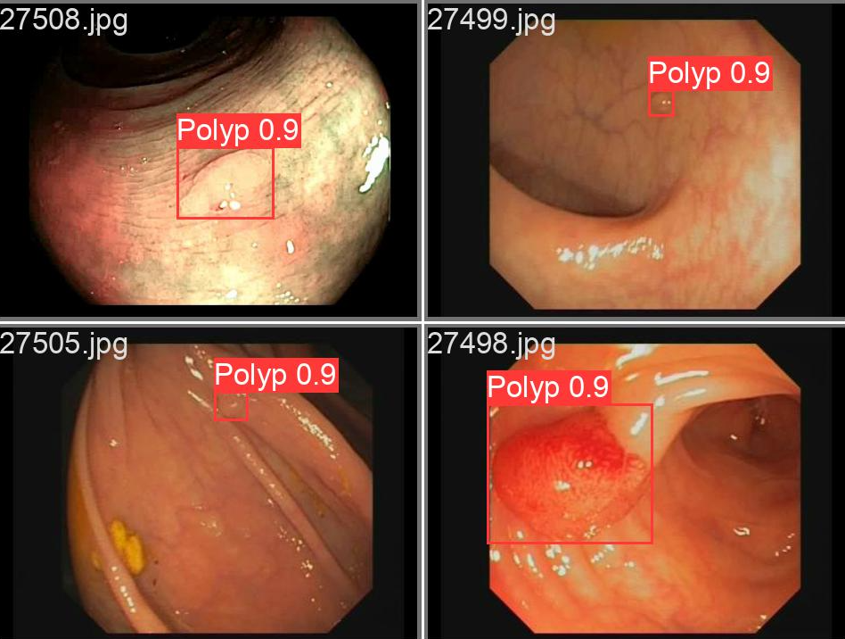

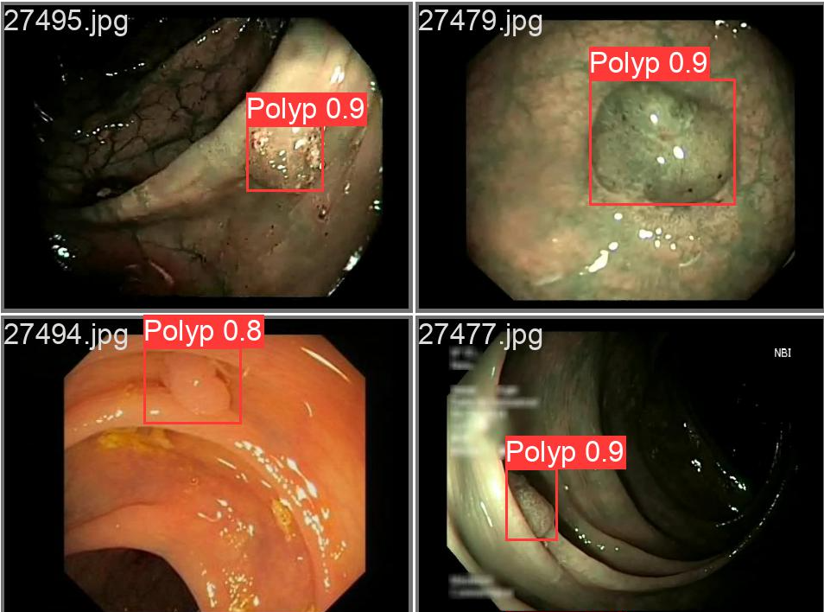

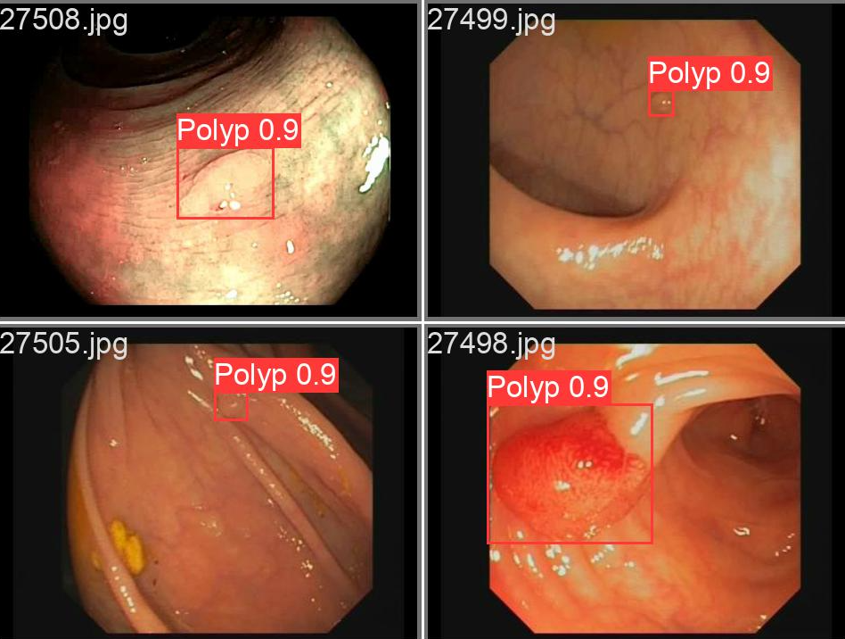

YOLOv5 for Polyp Detection

Artificial Intelligence and Polyp Detection - Evaluation of a Deep Learning-Based, Single-Stage Object Detector using Colonoscopy Videos

Principal Investigator: Dr James Li, Department of Gastroenterology and Hepatology, Changi General Hospital

Introduction and Aim

Artificial intelligence (AI)-assisted colonoscopy systems have been shown to increase adenoma detection rates. However, commercially available systems are often not trained on local data and cannot be modified by end-users after deployment. You Only Look Once (YOLO) is an open-source deep learning (DL) model that combines bounding box prediction and object classification in a single stage. We aimed to evaluate the feasibility of training YOLOv5 to detect polyps in colonoscopy videos.

Methods

The YOLOv5 model was first pre-trained on the Common Objects in Context (COCO) dataset and then trained on polyp images by transfer learning. Bounding boxes of polyps in the KUMC and HyperKvasir datasets were converted from COCO to YOLO labels. Images from the KUMC colonoscopy videos were split sequentially, with the first 90% used for training and the remaining 10% for validation. The test dataset consisted of the segmentation part of the HyperKvasir dataset. Each image in the final test dataset had one polyp and one bounding box. Performance of YOLOv5 was evaluated using precision, recall and mean average precision (mAP) at an intersection over union (IoU) threshold of 0.5 [mAP(0.5)]. We also measured mAP at IoU thresholds ranging from 0.5 to 0.95 [mAP(0.5-0.95)].
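The label conversion, sequential split and IoU criterion described in the Methods can be illustrated with a minimal Python sketch. It is not the study's actual code: the function names (`coco_to_yolo`, `sequential_split`, `iou`) are hypothetical, and it assumes COCO boxes are given in pixels as `[x_min, y_min, width, height]` and that YOLO labels store the box centre, width and height normalised by the image size.

```python
def coco_to_yolo(bbox, img_w, img_h):
    """Convert a COCO box [x_min, y_min, width, height] (pixels) to a
    YOLO box (x_center, y_center, width, height) normalised to [0, 1]."""
    x_min, y_min, w, h = bbox
    return (
        (x_min + w / 2) / img_w,  # normalised x of the box centre
        (y_min + h / 2) / img_h,  # normalised y of the box centre
        w / img_w,                # normalised box width
        h / img_h,                # normalised box height
    )


def sequential_split(items, train_fraction=0.9):
    """Split an ordered list: the first part for training, the rest for
    validation (assumed reading of the 'first 90% / remaining 10%' split)."""
    cut = int(len(items) * train_fraction)
    return items[:cut], items[cut:]


def iou(box_a, box_b):
    """Intersection over union of two boxes given as
    (x_min, y_min, x_max, y_max) corner coordinates."""
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    # A 200x100 px box at (100, 50) in a 400x200 px image (made-up values).
    print(coco_to_yolo([100, 50, 200, 100], img_w=400, img_h=200))
    # Two partially overlapping boxes; at mAP(0.5) a prediction counts as
    # a true positive only if its IoU with the ground truth is at least 0.5.
    print(iou((0, 0, 2, 2), (1, 1, 3, 3)) >= 0.5)
```

Under this convention, a predicted box is matched to a ground-truth polyp only when `iou(...)` meets the chosen threshold, which is why mAP(0.5-0.95) is a stricter measure than mAP(0.5).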

Results

The DL model was trained for 50 epochs, and the final test dataset comprised 945 images. In the training dataset, precision, recall and mAP(0.5) were 0.997, 0.986 and 0.995, respectively. The precision, recall and mAP(0.5) were similar in the validation dataset (0.998, 0.991 and 0.995, respectively). Precision, recall and mAP(0.5) for polyp detection in the test dataset were 0.757, 0.623 and 0.669, respectively.

Conclusion

Training YOLOv5 for polyp detection in colonoscopy videos is feasible, with moderate performance on the final test dataset after 50 epochs of training. Further training of this open-source DL model, and validation in real-time colonoscopy, are required to improve its performance metrics for polyp detection.